“Great design often stems from strong constraints.”

For a designer, not being able to move your hand is a strong one. This is what happened to me after a wrist injury I got while playing football. Suddenly, the traditional input systems you are used to become irrelevant. No more handles, no more pens, no more mouse. Trying new input systems was the only solution left. Here is a summary of the tools I discovered that could replace the good old mouse.

– Trackball

“Oh your new mouse looks so cool!”

If you think a trackball is a mouse, you’re in for a surprise when you try to use one. You move the cursor across the screen by rolling the ball with your thumb. This tiny mouse-looking device was a savior. There is a learning curve to it, and I found it harder to quickly reach a very specific part of the screen, but it works fantastically for moving elements around, something you do a lot when designing.

If you want to learn more about the pros and cons of trackballs, you can check this in-depth article.

– Voice command

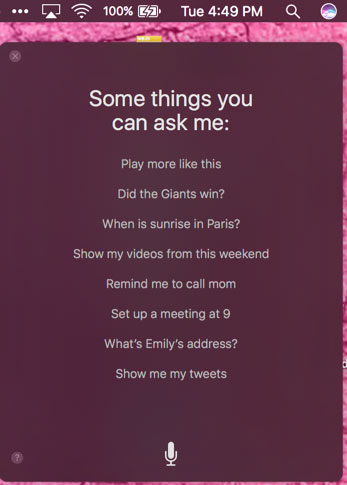

I particularly welcomed the macOS Sierra update that included Siri by default. It became easy to run some typical queries. To be honest, I still faced the usual confusion that you can expect from AI queries in 2016. But compared to my typing speed of two characters per minute, it felt like jumping into a spaceship.

As a designer, this inspired me to incorporate voice commands into the services I am working on. After all, there are a lot of people for whom, and situations in which, typing is not the most efficient input method. What if you’re on the go? I, for instance, use WhatsApp’s voice messaging every time I am on the move and need to focus on where I put my feet. Driving is also a good opportunity for hands-free applications.

– Eye command

While visiting Dubai’s GITEX conference, I came across a Tobii stand where they introduced eye-movement control. It tracks your eye movements to act as a cursor. The applications of this are potentially revolutionary. Here is an illustration of how it’s already used in video games.

At the show, the technology was mostly used to enable people with reduced mobility to communicate or even control their home devices. It worked through a screen showing images of devices: you would look at the picture of a lamp or a toaster and, after a few seconds, the device would switch on or off. Versions of the same platform are used for language processing.
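For designers curious about how this "look at it for a few seconds" interaction might be prototyped, here is a minimal sketch of dwell-time selection in Python. It is not Tobii's API: the `DwellSelector` class, the two-second threshold, and the target names are all assumptions made for illustration, and the gaze samples are fed in by hand rather than read from a real eye tracker.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

DWELL_SECONDS = 2.0  # assumed threshold; the demo only suggested "a few seconds"


@dataclass
class DwellSelector:
    """Toggles a target once the gaze has rested on it for `dwell` seconds."""

    dwell: float = DWELL_SECONDS
    states: Dict[str, bool] = field(default_factory=dict)  # target -> on/off
    _target: Optional[str] = None  # target currently being looked at
    _since: float = 0.0            # time the gaze arrived on that target
    _fired: bool = False           # prevents repeated toggles in one long look

    def update(self, timestamp: float, target: Optional[str]) -> Optional[str]:
        """Feed one gaze sample; return the target name if it was just toggled."""
        if target != self._target:
            # Gaze moved to a new target (or away): restart the dwell timer.
            self._target, self._since, self._fired = target, timestamp, False
            return None
        if target is None or self._fired:
            return None
        if timestamp - self._since >= self.dwell:
            # Gaze stayed long enough: flip the device state once.
            self.states[target] = not self.states.get(target, False)
            self._fired = True
            return target
        return None


# Hypothetical gaze samples: (time in seconds, image being looked at).
selector = DwellSelector()
samples = [(0.0, "lamp"), (1.0, "lamp"), (2.1, "lamp"), (2.5, "lamp"), (3.0, None)]
for t, looked_at in samples:
    selector.update(t, looked_at)
print(selector.states)  # {'lamp': True}
```

The `_fired` flag is the main design choice here: without it, staring at the lamp would flick it on and off on every sample past the threshold. Looking away and back resets the timer, so each deliberate look produces exactly one toggle.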

But more ambitious applications of this could involve wearable eye-trackers (think Google Glass) controlling items in the world around you, or giving you information about them.

Think of this as an improved version of the world as the Terminator saw it back in the 90s, with the added option to control the elements around you.

– Leap Motion

Trying more input systems got me curious about other possibilities out there. Leap Motion offers very exciting prospects, as you can see in the video below. It demonstrates their latest release, intended for combined use with VR devices. Although it requires good mobility, you can easily imagine its great potential for designers.

– Mind control

If your mind is not blown by now, you might want to check the work of these researchers at the University of Florida. The technology is still in experimental stages, but advanced enough to fly a drone by thought. It works by measuring the brain impulses associated with distinct thoughts: up, down, left, right, slow, fast, etc. It requires heavy calibration for now, but you can guess this is just the beginning.

Input principles

In 2016 these inputs were still largely ignored, but they definitely offer a lot of potential. 2017 might be the year when we start seeing commercial applications gain momentum. Plenty of designers – including myself – don’t get many opportunities to try these first hand, aside from pet projects. Few clients are willing to experiment with technologies that are still in experimental stages.

In that landscape, designers need to learn to identify opportunities to actually implement new input systems. Not every input type is easy to implement, and you need to find the right balance between disruptiveness, context of use, and end-user expectations.


Disrupt experiences now

If you are working on an ambitious project, you might want to consider exploring one or several of these technologies for your experience design. They are readily available at different stages of development, and you can use them to save your users time. Of course, the most advanced of these will probably be limited to showroom applications in the short term. But who knows, you might catch the tide at the right time and create a strong experience advantage for your products or services.

Are you already working with these input types? Are you planning to use any in the near future? Let us know in the comments section!